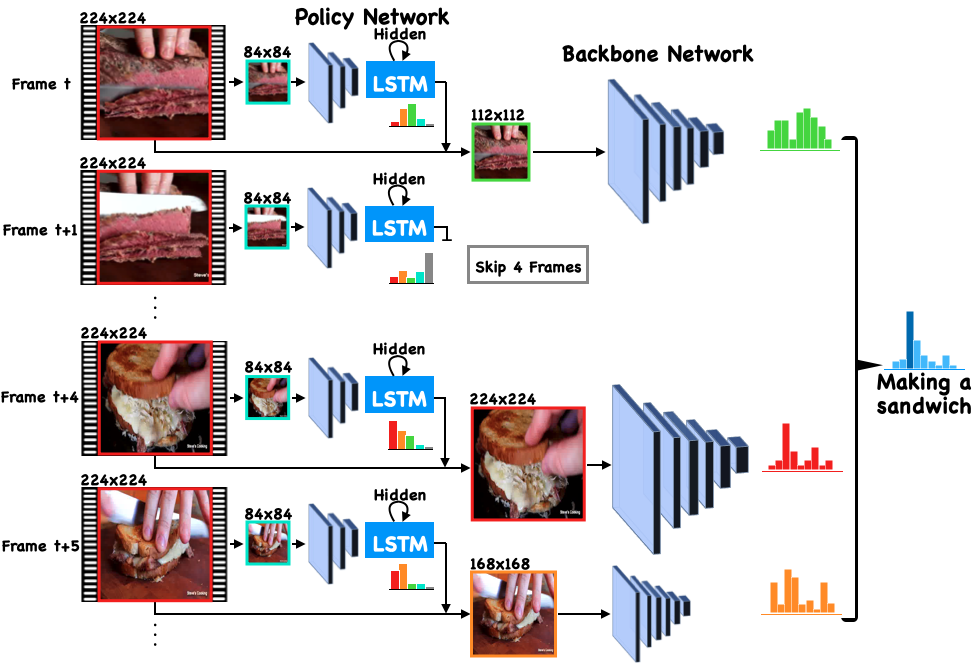

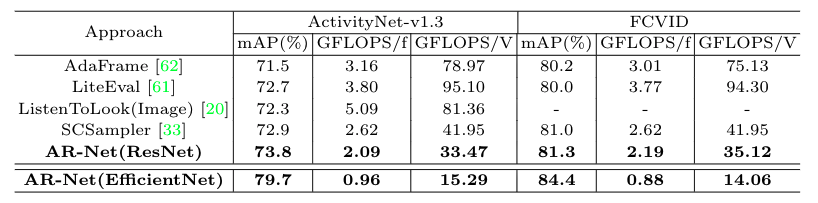

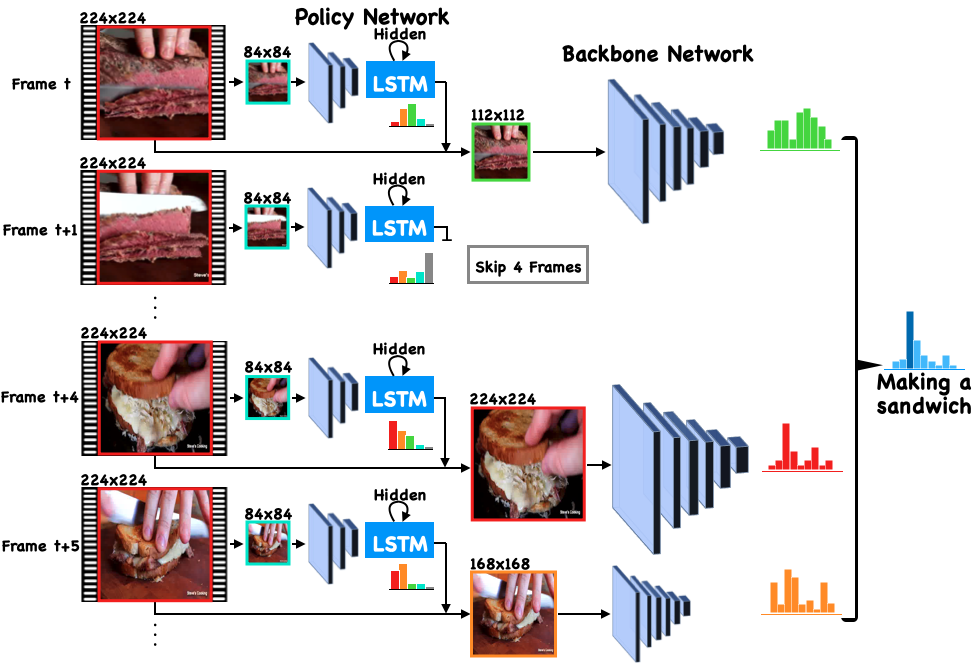

Yue Meng, Chung-Ching Lin, Rameswar Panda, Prasanna Sattigeri, Leonid Karlinsky, Aude Oliva, Kate Saenko, and Rogerio Feris. ARNet: Adaptive Frame Resolution for Efficient Action Recognition. European Conference on Computer Vision (ECCV), 2020. [PDF][Code]