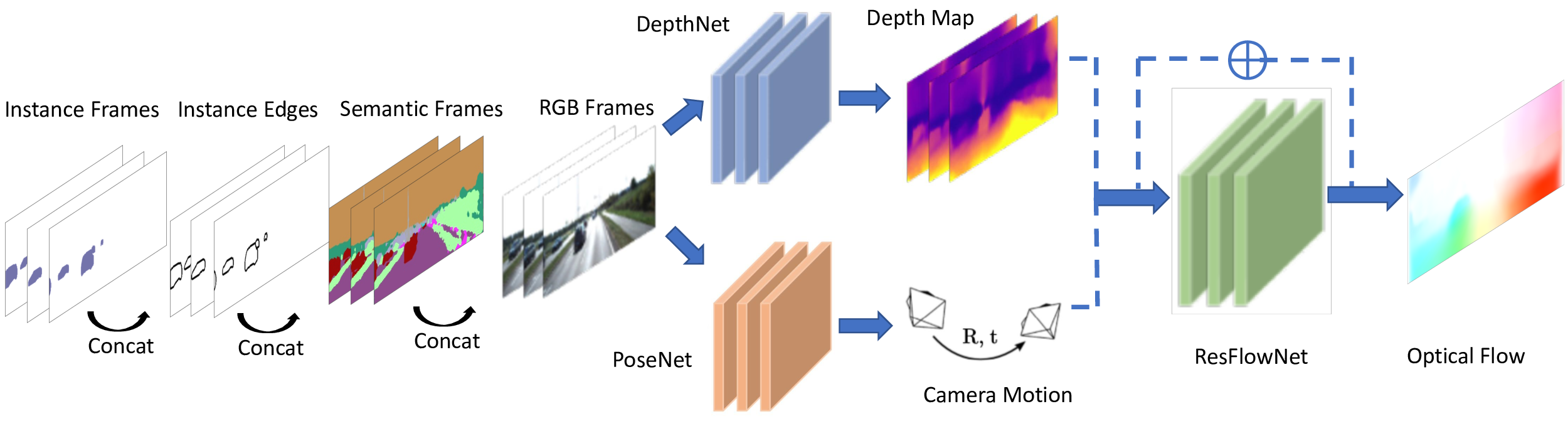

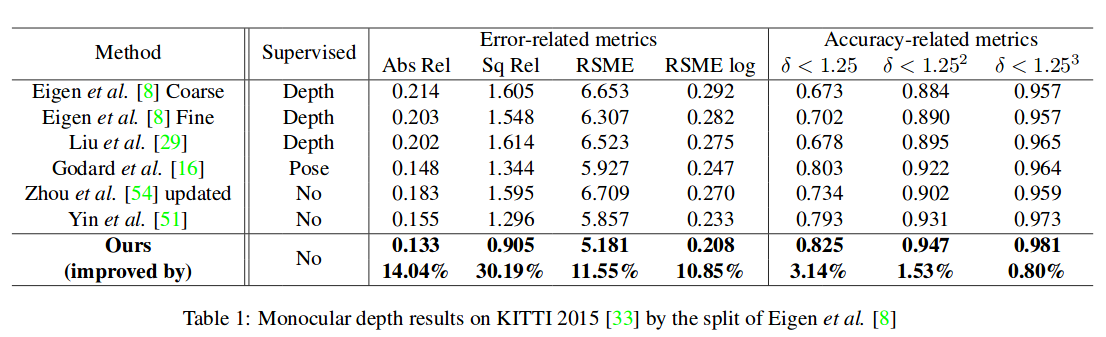

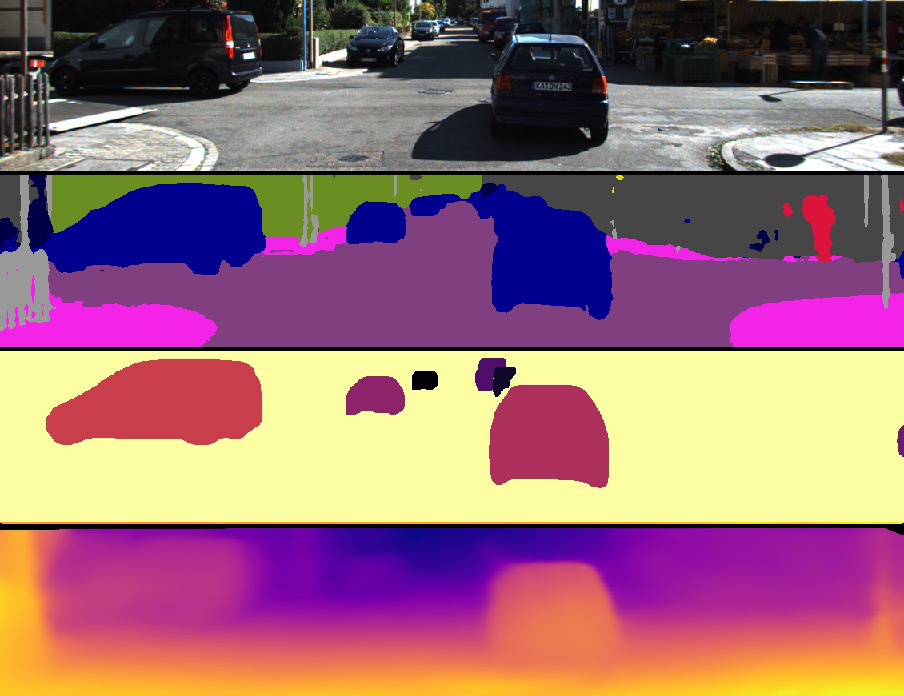

Yue Meng, Yongxi Lu, Aman Raj, Samuel Sunarjo, Rui Guo, Tara Javidi, Gaurav Bansal, and Dinesh Bharadia. SIGNet: Semantic Instance Aided Unsupervised 3D Geometry Perception. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019. [PDF] [Code]